(WS News) – Anthropic has taken a decisive step in the evolution of AI productivity tools with the launch of Claude Cowork, a new agent-based feature designed to bring the power of Claude Code to non-technical users. Instead of producing chat responses or code snippets, Cowork works as a persistent AI assistant that can read, edit, and create files in a folder on the user’s computer, all through plain natural-language instructions.

This launch signals a broader shift in artificial intelligence: the move from conversational bots to autonomous, action-oriented agents that actually do work.

What Is Claude Cowork?

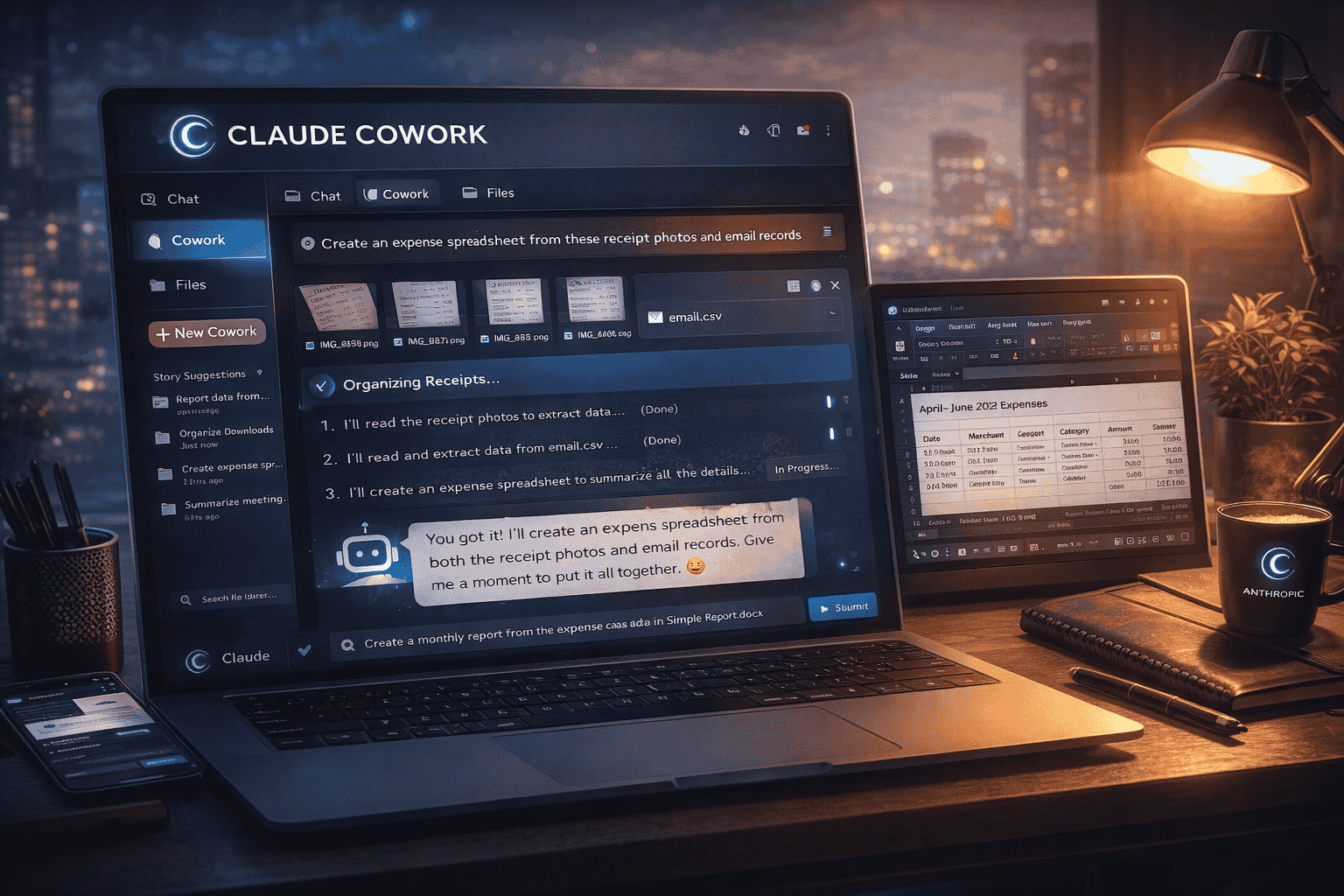

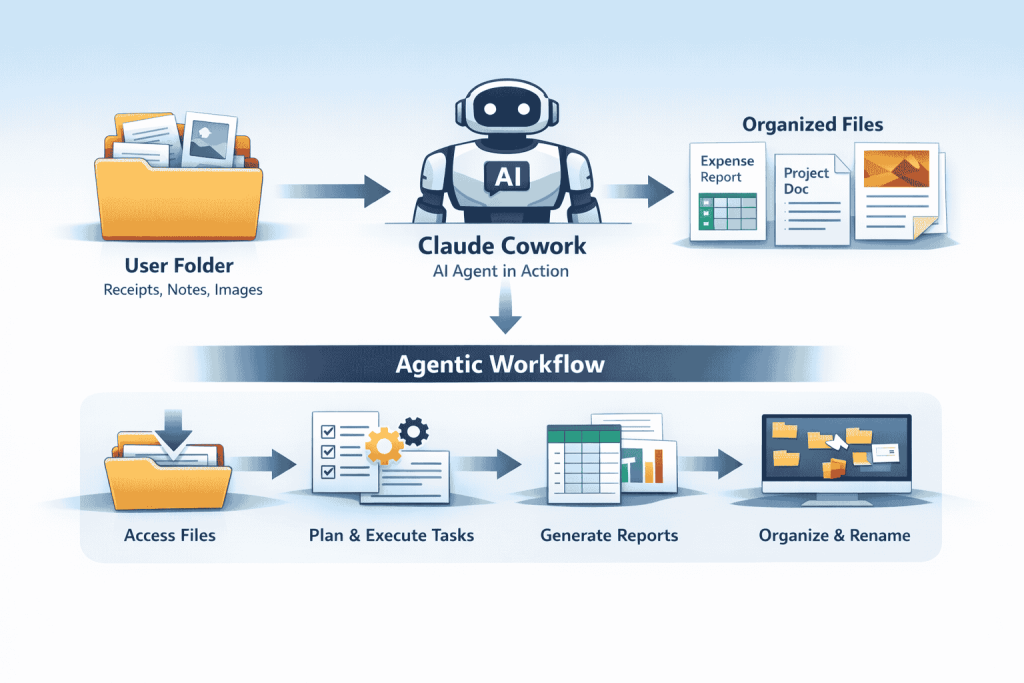

Claude Cowork is an AI agent integrated into the Claude Desktop application for macOS. Unlike a traditional chatbot, Cowork is granted access to a specific folder on a user’s computer. Within that sandboxed environment, Claude can analyze files, reorganize folders, generate documents, and complete multi-step tasks without requiring constant user input.

Anthropic describes Cowork as “Claude Code for the rest of your work,” and that framing is accurate. The tool is built on the same Claude Agent SDK as Claude Code, but removes command-line complexity and developer-oriented workflows. The result is a system that feels less like programming and more like delegating tasks to a digital coworker.

How Cowork Actually Works

Once a user grants access to a folder and describes a task, Cowork enters what Anthropic calls an agentic loop. Instead of responding once and stopping, Claude:

- Creates a plan to complete the task

- Executes steps in parallel

- Checks its own output

- Requests clarification if needed

Users can queue multiple tasks at once and even modify instructions while Cowork is still working. This fundamentally changes how people interact with AI. Rather than micromanaging prompts, users define outcomes.
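Anthropic has not published Cowork’s internals, so the Python sketch below is only a generic illustration of the plan, execute, check, and clarify cycle listed above. It is not Cowork’s implementation and does not use the Claude Agent SDK. The planner and executor (`make_plan`, `execute_step`) are hypothetical stand-ins for model calls and file operations. Pending steps are assumed to be independent and run in parallel on a thread pool, failed steps are retried, and anything that still fails after the last round is handed back to the user as a question.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class StepResult:
    step: str
    output: str


def make_plan(task: str) -> list[str]:
    # Hypothetical planner: a real agent would ask a model to break the task
    # into steps. Splitting on " and " is only a placeholder.
    return [part.strip() for part in task.split(" and ") if part.strip()]


def execute_step(step: str) -> StepResult:
    # Hypothetical executor standing in for a file read/write or tool call.
    # A step phrased as a question is treated as too ambiguous to act on.
    if step.endswith("?"):
        return StepResult(step, output="")
    return StepResult(step, output=f"done: {step}")


def check(result: StepResult) -> bool:
    # Self-check: here, simply confirm the step produced some output.
    return bool(result.output)


def agentic_loop(task: str, max_rounds: int = 2) -> tuple[list[StepResult], list[str]]:
    """Plan, run pending steps in parallel, check each result, and retry
    failures; steps that still fail are returned as clarification requests."""
    pending = make_plan(task)
    completed: list[StepResult] = []
    for _ in range(max_rounds):
        if not pending:
            break
        # Steps are assumed independent, so they can run concurrently.
        with ThreadPoolExecutor() as pool:
            results = list(pool.map(execute_step, pending))
        completed += [r for r in results if check(r)]
        pending = [r.step for r in results if not check(r)]
    return completed, pending


if __name__ == "__main__":
    done, questions = agentic_loop(
        "rename receipts and build expense sheet and archive which folder?"
    )
    for r in done:
        print(r.output)
    print("Needs clarification:", questions)
```

Run on the sample task, the loop completes the first two steps and returns `"archive which folder?"` as the one step it cannot act on without the user, which mirrors the "requests clarification if needed" behavior rather than guessing.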

Typical use cases include:

- Organizing messy folders and renaming files intelligently

- Creating expense spreadsheets from receipt screenshots

- Drafting reports from scattered notes

- Preparing presentations and structured documents

This is not “AI chat.” It’s AI execution.

Built by AI, in Record Time

One of the most striking aspects of Cowork’s launch is how quickly it was built. According to Anthropic insiders, the entire feature was developed in roughly a week and a half, with most of the code generated by Claude Code itself.

This creates a recursive feedback loop in which AI tools accelerate the development of new AI tools. If the pattern holds, the pace of development could compound rather than grow linearly.

That could give Anthropic a structural advantage: companies that use their own AI effectively in-house can iterate faster than competitors relying on human-only development cycles.

Who Cowork Is For (and Who It Isn’t)

Cowork is clearly aimed at knowledge workers, not developers. Marketers, analysts, administrators, managers, and freelancers stand to benefit the most. Anyone dealing with files, documents, reports, or routine office tasks can offload cognitive overhead to the agent.

That said, Cowork is not for careless users.

Anthropic repeatedly warns that vague or poorly worded instructions can lead to unintended actions, including deleting files. Claude will only access folders it has been explicitly granted, but inside those folders it can act on what it reads, and that ability to act is exactly what introduces risk.
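Anthropic does not document in detail how Cowork enforces its folder boundary. As a hypothetical Python sketch of what a folder grant can mean at the tool layer, the guard below resolves every requested path against the granted folder, rejects anything that escapes it (for example through `..`, an absolute path, or a symlink), and refuses deletions unless they are explicitly confirmed. `FolderSandbox`, `PermissionDenied`, and their methods are illustrative names, not part of any Anthropic API.

```python
from pathlib import Path


class PermissionDenied(Exception):
    """Raised when a request falls outside the granted folder or is unconfirmed."""


class FolderSandbox:
    """Hypothetical guard that confines file operations to one granted folder."""

    def __init__(self, granted: str) -> None:
        # Resolve once so the boundary itself is an absolute, symlink-free path.
        self.root = Path(granted).expanduser().resolve()

    def check(self, requested: str) -> Path:
        # resolve() collapses ".." and follows symlinks, so "../secrets" or a
        # link pointing elsewhere cannot slip past the comparison below.
        # An absolute request replaces self.root entirely and is caught too.
        target = (self.root / requested).resolve()
        if not target.is_relative_to(self.root):  # Python 3.9+
            raise PermissionDenied(f"{requested!r} is outside {self.root}")
        return target

    def delete(self, requested: str, confirmed: bool = False) -> None:
        # Destructive operations need explicit confirmation on top of the
        # path check, since a misread instruction is the main failure mode.
        target = self.check(requested)
        if not confirmed:
            raise PermissionDenied(f"deleting {target.name!r} needs confirmation")
        target.unlink()
```

The design separates two questions: whether a path is in scope at all, and whether a destructive action inside that scope was actually intended. A boundary check alone cannot protect files inside the granted folder from a badly worded instruction.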

This tool rewards clarity. If you’re sloppy with instructions, you’ll get sloppy outcomes.

Safety, Trust, and Real Risks

Anthropic has been unusually transparent in acknowledging Cowork’s dangers. Because the agent takes real actions on real files, it introduces risks that chat-only assistants, which merely return text, do not.

Two major concerns stand out:

- Misinterpretation of instructions, which could result in destructive file operations

- Prompt injection attacks, where malicious instructions embedded in online content could influence the agent’s behavior

Anthropic claims it has built defenses against these risks, including isolation via a virtual machine and explicit permission boundaries. Still, the company strongly recommends using Cowork only on non-sensitive files and maintaining backups.
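Following the backup advice can be as simple as copying the folder before handing it to the agent. The standard-library Python sketch below makes a timestamped copy with `shutil.copytree`. The `snapshot` function name and the backup layout are assumptions for illustration, not a Cowork feature, and the backup location is required to sit outside the folder being copied so the agent cannot touch it.

```python
import shutil
from datetime import datetime
from pathlib import Path


def snapshot(folder: str, backup_root: str) -> Path:
    """Copy `folder` into a new timestamped directory under `backup_root`."""
    src = Path(folder).expanduser().resolve()
    root = Path(backup_root).expanduser().resolve()
    # Keep backups outside the working folder: the agent should not be able
    # to modify them, and copytree should never copy a tree into itself.
    if root.is_relative_to(src):  # Python 3.9+; also true when root == src
        raise ValueError("backup_root must be outside the folder being backed up")
    # Microseconds in the name keep back-to-back snapshots from colliding,
    # since copytree refuses to write into an existing directory by default.
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S-%f")
    dest = root / f"{src.name}-{stamp}"
    shutil.copytree(src, dest)
    return dest
```

Taking a fresh snapshot before each session means a destructive misstep costs at most one session’s work.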

This honesty matters. AI agents are powerful, but they are not yet foolproof.

How Cowork Competes With Microsoft and Google

Cowork positions Anthropic directly against Microsoft Copilot and Google’s agent-based initiatives. The key difference is architectural philosophy.

Microsoft is embedding AI deep into the operating system. Anthropic, by contrast, relies on controlled isolation, limiting the agent to defined folders and connectors. This reduces the blast radius of any mistake while preserving utility.

More importantly, Cowork evolved bottom-up. Rather than being designed first as a consumer assistant, it emerged from the way developers were already using Claude Code for non-coding tasks, and that lineage gives it more mature agentic behavior from day one.

Why Claude Cowork Actually Matters

Claude Cowork isn’t just another AI feature; it is evidence that the bottleneck in AI adoption is shifting. The main challenge is no longer model intelligence but trust, workflow integration, and control.

Anthropic is betting that users are ready to delegate real work to AI, not just ask it questions. Whether mainstream adoption follows will depend on how well users learn to give precise instructions and manage risk.

One thing is certain: the chatbot era is ending. The agent era has already begun.